How to Transcribe a Recording to Text


Natural language processing is the field of helping computers understand written and spoken words in the way humans do. It was the development of language and communication that led to the rise of human civilization, so it’s only natural that we want computers to develop that ability too.

However, even we humans find it challenging to receive, interpret, and respond to the overwhelming amount of language data we experience on a daily basis.

If computers could process text data at scale and with human-level accuracy, there would be countless possibilities to improve human lives. In recent years, natural language processing has contributed to groundbreaking innovations such as simultaneous translation, sign-language-to-text converters, and smart assistants such as Alexa and Siri.

However, understanding human languages is difficult because of their complexity. Most languages contain numerous nuances, dialects, and regional differences that are difficult to standardize when training a machine learning model.

The field is attracting growing attention as the benefits of NLP become better understood, which means that many industries are likely to integrate NLP models into their processes in the near future.

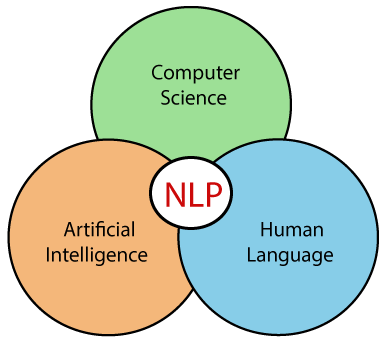

Natural language processing (NLP) refers to using computers to process and analyze human language. You can think of natural language processing as the overlapping branch between computer science, artificial intelligence, and human linguistics (pictured above).

The main purpose of natural language processing is to engineer computers to understand and even learn languages as humans do. Since machines have far more computing power than humans, they can process and analyze text data more efficiently.

Thus, natural language processing allows language-related tasks to be completed at scales previously unimaginable.

The concept of natural language processing emerged in 1950, when Alan Turing published an article titled “Computing Machinery and Intelligence”. Turing was a mathematician who was heavily involved in the development of early electronic computers and saw their potential to replicate the cognitive capabilities of a human.

Turing’s idea was captured by the question he opened his article with: “Can machines think?” To answer it, he devised what is now called the Turing test, which checks whether a machine can be considered “intelligent”, i.e., whether it can behave as a thinking human would.

To make that question testable, Turing proposed the “imitation game”. In its commonly cited form, a human interrogator exchanges written notes with two hidden players, one of them a computer, and must work out from the replies which player is the machine.

To fool the interrogator, the computer must be capable of receiving, interpreting, and generating words, which is the core of natural language processing. Turing argued that a computer that could do so convincingly should be considered intelligent.

Text mining (or text analytics) is often confused with natural language processing.

NLP is concerned with analyzing natural human communication in all its forms: text, images, speech, video, and so on.

Text analytics is only focused on analyzing text data such as documents and social media messages.

Both text mining and NLP ultimately serve the same function: extracting information from natural language to obtain actionable insights.

Many natural language processing models are trained through machine learning. Simply put, the NLP algorithm follows a predetermined training procedure and is fed textual data. Through continuous feeding, the NLP model improves its comprehension of language and generates more accurate responses accordingly.

Natural language processing involves two main components: natural language understanding (NLU) and natural language generation (NLG).

Both NLU and NLG are subsets of natural language processing, so the overall process looks something like this:

Natural language processing (NLP) = NLU (analyzing input/text data) + NLG (producing output text)

In natural language understanding, the machine focuses on breaking down text data and interpreting its meaning. Natural language understanding involves three linguistic levels to understand text data:

An example of NLU is when you ask Siri “What is the weather today?” and it breaks down the question’s meaning, grammar, and intent. An AI such as Siri would use several NLP techniques during NLU, including lemmatization, stemming, parsing, POS tagging, and more, which we’ll discuss in more detail later.

After the AI has analyzed a text input, natural language generation occurs.

Natural language generation refers to producing structured text that humans can easily understand. For example, Siri might reply to the above question with “It is currently 77 degrees Fahrenheit with a high chance of rain.”
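The simplest form of natural language generation is template filling: structured data goes in and a readable sentence comes out. Assistants like Siri use far more sophisticated, often neural, generation, so treat the following only as a minimal sketch. The `WeatherReport` record and the rain-probability thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeatherReport:
    """Hypothetical structured output from an upstream weather service."""
    temperature_f: int       # current temperature in degrees Fahrenheit
    rain_probability: float  # chance of rain, from 0.0 to 1.0


def describe_rain(probability: float) -> str:
    """Turn a numeric probability into a human-friendly phrase (thresholds are illustrative)."""
    if probability >= 0.7:
        return "a high chance of rain"
    if probability >= 0.3:
        return "a moderate chance of rain"
    return "a low chance of rain"


def generate_reply(report: WeatherReport) -> str:
    """Fill a fixed sentence template with values taken from the structured data."""
    return (
        f"It is currently {report.temperature_f} degrees Fahrenheit "
        f"with {describe_rain(report.rain_probability)}."
    )


print(generate_reply(WeatherReport(temperature_f=77, rain_probability=0.8)))
# It is currently 77 degrees Fahrenheit with a high chance of rain.
```

Templates are predictable and easy to audit, which is why many chatbots still use them for factual replies, but they cannot phrase anything the template author did not anticipate.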

Earlier, we discussed how natural language processing can be divided into natural language understanding and natural language generation. However, these two components involve several smaller steps because of how complicated human language is.

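The 5 main steps of natural language processing are text preprocessing, morphological and lexical analysis, syntactic analysis, semantic analysis, and pragmatic analysis.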

However, natural language analysis is only half of the story. An important but often neglected aspect of NLP is generating an accurate and reliable response. Thus, the above NLP steps are accompanied by natural language generation (NLG).

Text preprocessing is the first step of natural language processing and involves cleaning the text data for further processing. To do so, the NLP system breaks sentences down into smaller units and removes noise such as punctuation and emoticons.
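A minimal sketch of this cleaning step, assuming English input and a deliberately tiny emoticon pattern. A real pipeline would use a proper tokenizer (such as those in NLTK) and would decide per task which symbols count as noise.

```python
import re
import string


def preprocess(text: str) -> list[str]:
    """Clean raw text: lowercase it, drop simple emoticons and punctuation, split on whitespace."""
    text = text.lower()
    # Remove a few common ASCII emoticons such as :) ;-( :D before punctuation is stripped.
    text = re.sub(r"[:;]-?[()dp]", " ", text)
    # Replace every ASCII punctuation character with a space.
    table = str.maketrans(string.punctuation, " " * len(string.punctuation))
    text = text.translate(table)
    # Splitting on whitespace also collapses the extra spaces introduced above.
    return text.split()


print(preprocess("Hello, world! :) NLP is FUN."))
# ['hello', 'world', 'nlp', 'is', 'fun']
```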

Another reason text preprocessing is necessary is the diversity of human language. Languages such as Mandarin and Japanese do not follow the same rules as English; for example, they do not separate words with spaces. Thus, the NLP model must perform segmentation and tokenization to accurately identify the words that make up a sentence, especially in a multilingual NLP model.
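To see why segmentation matters, consider text with no spaces between words. A classic baseline is greedy maximum matching: at each position, take the longest dictionary word that starts there. The sketch below uses a tiny hypothetical vocabulary and the Chinese sentence 我喜欢自然语言处理 (“I like natural language processing”). Real segmenters rely on statistical or neural models, because greedy matching is easily misled.

```python
def max_match(text: str, vocabulary: set[str]) -> list[str]:
    """Greedy left-to-right maximum matching word segmentation.

    At each position, take the longest vocabulary word that starts there;
    if none matches, emit a single character and move on.
    """
    longest = max(len(word) for word in vocabulary)
    tokens = []
    i = 0
    while i < len(text):
        for length in range(min(longest, len(text) - i), 0, -1):
            candidate = text[i:i + length]
            if candidate in vocabulary or length == 1:
                tokens.append(candidate)
                i += length
                break
    return tokens


# Hypothetical toy vocabulary.
vocab = {"我", "喜欢", "自然", "语言", "自然语言", "处理"}
print(max_match("我喜欢自然语言处理", vocab))
# ['我', '喜欢', '自然语言', '处理']
```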

Morphological and lexical analysis refers to analyzing a text at the level of individual words. To better understand this stage of NLP, we have to broaden the picture to include the study of linguistics.

In linguistics (the study of language), a morpheme is the smallest meaningful unit of a word. Morphemes combine to form a word and its meaning. For example, the morphemes of “unadjustable” are:

“un” | “adjust” | “able” |
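A rough sketch of how such a split could be computed: try known prefixes and suffixes, and accept a split only when what remains is a known root. The affix and root lists are small hypothetical samples; real morphological analyzers use far larger lexicons and also handle spelling changes (e.g., “happy” + “ness” becoming “happiness”).

```python
# Small, hypothetical affix and root lists; "" means "no affix".
PREFIXES = ("", "un", "re", "dis")
SUFFIXES = ("", "able", "ness", "ment", "ing")
ROOTS = {"adjust", "agree", "kind", "think"}


def split_morphemes(word: str) -> list[str]:
    """Return [prefix, root, suffix] (empty parts omitted) if the word decomposes
    into a known root plus known affixes; otherwise return the word unsplit."""
    for prefix in PREFIXES:
        for suffix in SUFFIXES:
            if word.startswith(prefix) and word.endswith(suffix):
                root = word[len(prefix):len(word) - len(suffix)]
                if root in ROOTS:
                    return [part for part in (prefix, root, suffix) if part]
    return [word]


print(split_morphemes("unadjustable"))  # ['un', 'adjust', 'able']
print(split_morphemes("disagreement"))  # ['dis', 'agree', 'ment']
print(split_morphemes("uncle"))         # ['uncle'] (no known root, so no split)
```

Requiring a known root is what stops the function from wrongly splitting “uncle” into “un” + “cle”.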

On the other hand, lexical analysis involves examining the lexis of a language, that is, what its words mean. Words are broken down into lexemes, whose meanings are defined by the lexicon (the dictionary of a language). For example, “walk” is a lexeme that covers the forms “walks”, “walking”, and “walked”.

Syntactic analysis (also known as parsing) refers to examining strings of words in a sentence and how they are structured according to syntax – grammatical rules of a language. These grammatical rules also determine the relationships between the words in a sentence.

For example, the sentence “The old man was driving a car.” can be tagged as:

“the” | “old” | “man” | “was” | “driving” | “a” | “car” | “.” |

determiner | adjective | noun | auxiliary verb | verb | determiner | noun | punctuation |

Semantic analysis refers to understanding the literal meaning of an utterance or sentence. It is a complex process that depends on the results of parsing and lexical information.

While working out the meaning of a sentence is common sense for humans, computers interpret language far more literally. This creates multiple NLP challenges when determining meaning from text data.

One such challenge is that a word can have several definitions, and the definition that applies can drastically change a sentence’s meaning. For example, “run” can mean “manage”, “sprint”, or “publish”.

Pragmatic analysis refers to understanding the meaning of sentences with an emphasis on context and the speaker’s intention. Other elements that are taken into account when determining a sentence’s inferred meaning are emojis, spaces between words, and a person’s mental state.

You can think of an NLP model conducting pragmatic analysis as a computer trying to perceive conversations as a human would. When you interpret a message, you’ll be aware that words aren’t the sole determiner of a sentence’s meaning. Pragmatic analysis is essentially a machine’s attempt to replicate that thought process.

Natural language processing technically has 5 steps and ends with pragmatic analysis. However, we cannot ignore the main reason why NLP was created in the first place: to make computers think and behave intelligently like humans.

Natural language generation refers to an NLP model producing meaningful text outputs after internalizing some input, for example, a chatbot replying to a customer inquiry about a shop’s opening hours.

The above steps are parts of a general natural language processing pipeline. However, there are specific areas that NLP machines are trained to handle. These tasks differ from organization to organization and are heavily dependent on your NLP needs and goals.

That said, there are dozens of natural language processing tasks. Rather than covering the full list of NLP tasks, we’ll only go through 13 of the most common ones in an NLP pipeline:

Automatic speech recognition (ASR) is one of the most common NLP tasks and involves recognizing speech and converting it into text. While not yet as accurate as human transcribers, current speech recognition tools achieve a low enough Word Error Rate (WER) for many business applications.
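WER counts the word substitutions (S), deletions (D), and insertions (I) needed to turn the recognizer’s output into a correct reference transcript, divided by the number of words in the reference (N): WER = (S + D + I) / N. A minimal sketch using a word-level edit distance, assuming a non-empty reference and simple whitespace tokenization:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N, computed as a word-level Levenshtein distance.

    Assumes a non-empty reference transcript.
    """
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = minimum edits to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# One substituted word out of six reference words, so WER = 1/6.
print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

Real evaluations usually normalize casing and punctuation before scoring, since otherwise “The” and “the” would count as a substitution.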

Text-to-speech (TTS) is the reverse of ASR and involves converting text data into audio. Like speech recognition, text-to-speech has many applications, especially in childcare and as an aid for people with visual impairments. TTS is an important NLP task because it makes content accessible.

By making your content more inclusive, you can tap into a neglected market and improve your organization’s reach, sales, and SEO. In fact, rising demand for handheld devices and government spending on education for differently-abled people are driving a 14.6% CAGR in the US text-to-speech market.

Text classification refers to assigning predefined categories to text. Machine learning models often sort large volumes of documents and text data into categories, resulting in optimized workflow processes and actionable insights. For example, incoming emails can be classified as spam or non-spam.
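One common way to build such a classifier is a naive Bayes model, which learns how often each word appears in each category. The minimal sketch below trains on four made-up emails and uses add-one (Laplace) smoothing; a production spam filter would need far more data and better tokenization.

```python
import math
from collections import Counter


def train(examples):
    """Count words per label and documents per label from (text, label) pairs."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Return the label with the highest naive Bayes log-probability for the text."""
    vocabulary = set()
    for counts in word_counts.values():
        vocabulary.update(counts)
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        # log P(label) + sum of log P(word | label), with add-one smoothing
        score = math.log(label_counts[label] / total_docs)
        for word in text.lower().split():
            score += math.log((counts[word] + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


emails = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "not spam"),
    ("please review the attached report", "not spam"),
]
word_counts, label_counts = train(emails)
print(classify("free prize money", word_counts, label_counts))   # spam
print(classify("review the report", word_counts, label_counts))  # not spam
```

Working in log-probabilities avoids numeric underflow when many small word probabilities are multiplied together.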

Sentiment analysis, or opinion mining, is the process of determining a subject’s feelings from a given text. An NLP algorithm assigns sentiment scores to words or sentences based on a lexicon (a list of words and their associated feelings).

Then, the sentiment analysis model will categorize the analyzed text according to emotions (sad, happy, angry), positivity (negative, neutral, positive), and intentions (complaint, query, opinion).
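A minimal sketch of the lexicon-based approach: sum word scores, flip the sign after a simple negator such as “not”, and map the total onto negative, neutral, or positive. The lexicon, its scores, and the threshold are made-up assumptions; real lexicons contain thousands of entries, and many tools use machine-learned models instead.

```python
import string

# Hypothetical sentiment lexicon: word -> score (positive > 0, negative < 0).
LEXICON = {"love": 2, "great": 2, "good": 1, "slow": -1, "bad": -2, "terrible": -3}
NEGATORS = {"not", "never", "no"}


def analyze_sentiment(text: str, threshold: float = 0.5) -> tuple[int, str]:
    """Return (total score, polarity label) for a piece of text."""
    words = text.lower().translate(str.maketrans("", "", string.punctuation)).split()
    score = 0
    for i, word in enumerate(words):
        value = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            value = -value  # "not good" counts as negative
        score += value
    if score > threshold:
        return score, "positive"
    if score < -threshold:
        return score, "negative"
    return score, "neutral"


print(analyze_sentiment("I love this product, it is great!"))   # (4, 'positive')
print(analyze_sentiment("The app is not good and very slow."))  # (-2, 'negative')
```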

Word sense disambiguation (WSD) refers to identifying the correct meaning of a word based on the context it’s used in. For example, “bass” can be either a musical instrument or a fish. As with sentiment analysis, NLP models use machine learning or rule-based approaches to improve their context identification.
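One classic rule-based approach is the simplified Lesk algorithm: choose the sense whose dictionary definition (gloss) shares the most words with the surrounding sentence. The glosses and stopword list below are hypothetical stand-ins for a real dictionary such as WordNet (NLTK also ships a `lesk` function in `nltk.wsd`).

```python
import string

# Hypothetical sense inventory: each sense of "bass" has a short gloss.
SENSES = {
    "bass": {
        "fish": "a freshwater or sea fish caught by anglers in a lake or river",
        "instrument": "a low pitched musical instrument such as a guitar played in a band",
    }
}
STOPWORDS = {"a", "an", "the", "in", "on", "or", "by", "such", "as", "he", "she", "i", "to", "of"}


def content_words(text: str) -> set[str]:
    """Lowercase, strip punctuation, and drop stopwords."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return {word for word in cleaned.split() if word not in STOPWORDS}


def simplified_lesk(word: str, sentence: str) -> str:
    """Pick the sense whose gloss overlaps most with the sentence's content words."""
    context = content_words(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bass", "He caught a bass in the lake."))  # fish
print(simplified_lesk("bass", "She played bass in a band."))     # instrument
```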

Stopword removal is part of preprocessing and involves removing stopwords, the most common words in a language, such as “a”, “on”, “the”, “is”, and “where”. However, removing stopwords is not always necessary; it depends on the specific task at hand.

For instance, stopwords do not provide meaningful data in text classification but give important context for translation machines.
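A minimal stopword filter, assuming a small hand-written stopword list; libraries such as NLTK ship fuller lists (e.g., `nltk.corpus.stopwords`). Whether to apply it at all depends on the task, as described above.

```python
# Small illustrative stopword list; real lists contain a few hundred words.
STOPWORDS = {"a", "an", "and", "in", "is", "of", "on", "the", "to", "where"}


def remove_stopwords(tokens: list[str]) -> list[str]:
    """Keep only tokens that are not stopwords (case-insensitive)."""
    return [token for token in tokens if token.lower() not in STOPWORDS]


print(remove_stopwords("Where is the cat on the mat".split()))
# ['cat', 'mat']
```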

Tokenization refers to separating sentences into discrete chunks called tokens. NLP systems commonly split sentences into individual words, but some split words into characters (e.g., h, i, g, h, e, r) or subwords (e.g., high, er).

Tokenization is also one of the first steps of natural language processing and a major part of text preprocessing. Its main purpose is to break messy, unstructured data down into discrete units that can then be converted into numerical data, which computers handle far more readily than words.

For example, the sentence “What is natural language processing?” can be tokenized into five word tokens (ignoring the question mark): ['what', 'is', 'natural', 'language', 'processing']. The NLP system then takes these individual tokens for further analysis.
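The three granularities, plus the step of turning tokens into numbers, can be sketched as follows. The subword splitter is a simplified greedy longest-match approach in the spirit of WordPiece, not a faithful implementation, and its two-entry vocabulary is hypothetical.

```python
import re


def word_tokenize(text: str) -> list[str]:
    """Lowercase and keep runs of letters or digits; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def char_tokenize(word: str) -> list[str]:
    """Split a word into individual characters."""
    return list(word)


def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match split into known subwords; unknown characters stand alone."""
    pieces, start = [], 0
    while start < len(word):
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:  # no known subword starts here
            pieces.append(word[start])
            start += 1
    return pieces


def to_ids(tokens: list[str]) -> list[int]:
    """Map each distinct token to an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    return [vocab.setdefault(token, len(vocab)) for token in tokens]


tokens = word_tokenize("What is natural language processing?")
print(tokens)                                      # ['what', 'is', 'natural', 'language', 'processing']
print(char_tokenize("higher"))                     # ['h', 'i', 'g', 'h', 'e', 'r']
print(subword_tokenize("higher", {"high", "er"}))  # ['high', 'er']
print(to_ids(tokens))                              # [0, 1, 2, 3, 4]
```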

Lemmatization refers to tracing the root form of a word, which linguists call a lemma. These root words are easier for computers to understand and, in turn, help them generate more accurate responses. For example, the lemma of “jumps”, “jumping”, and “jumped” is “jump”.

Stemming is the process of removing the end or beginning of a word while taking into account common suffixes (-ment, -ness, -ship) and prefixes (under-, down-, hyper-). Both stemming and lemmatization attempt to obtain the base form of a word.

However, stemming simply strips prefixes and suffixes from a word, so its output can be inaccurate. Lemmatization, on the other hand, considers a word’s morphology (how a word is structured) and its meaning in context.

To illustrate, here’s what the stem and lemma of various inflected forms of “change” look like:
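The contrast can be sketched with a deliberately crude suffix-stripping stemmer and a lookup-table lemmatizer. Both the suffix rules and the lemma table are hypothetical simplifications; real tools such as NLTK’s `PorterStemmer` and `WordNetLemmatizer` use much more careful rules and a full dictionary.

```python
# Suffixes tried in order; a stem must keep at least 3 characters.
SUFFIXES = ("ing", "ed", "es", "s", "e")

# Hypothetical lemma dictionary; real lemmatizers also use the word's part of speech.
LEMMAS = {
    "change": "change", "changes": "change", "changed": "change", "changing": "change",
    "was": "be", "is": "be",
}


def crude_stem(word: str) -> str:
    """Chop off the first matching suffix, without checking that the result is a real word."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def lemmatize(word: str) -> str:
    """Look up the dictionary form, falling back to the word itself."""
    return LEMMAS.get(word, word)


for form in ["change", "changes", "changed", "changing", "was"]:
    print(f"{form:10} stem={crude_stem(form):8} lemma={lemmatize(form)}")
```

The stemmer maps every form of “change” to “chang”, which is not a word, and cannot relate “was” to “be”; the lemmatizer returns real dictionary forms, but only for words it knows.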

POS tagging refers to assigning a part of speech (e.g., noun, verb, adjective) to each word in a corpus (a body of text). POS tagging is useful for a variety of NLP tasks, including identifying named entities, inferring semantic information, and building parse trees.

Here is an example of POS tagging conducted on the sentence, “This is an article.”

“this” | “is” | “an” | “article” | “.” |

determiner | auxiliary verb | determiner | noun | punctuation |
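The simplest possible tagger looks each word up in a hand-built lexicon. The sketch below, with a hypothetical lexicon covering only the two example sentences in this article, reproduces both tag sequences. Real taggers (for example NLTK’s `nltk.pos_tag`) use statistical models that consider context, because many words, like “run”, can take several parts of speech.

```python
import re

# Hypothetical lexicon covering only the example sentences.
TAG_LEXICON = {
    "this": "determiner", "the": "determiner", "a": "determiner", "an": "determiner",
    "is": "auxiliary verb", "was": "auxiliary verb",
    "article": "noun", "man": "noun", "car": "noun",
    "old": "adjective", "driving": "verb",
}


def pos_tag(sentence: str) -> list[tuple[str, str]]:
    """Tag each word or punctuation mark using the lexicon."""
    tokens = re.findall(r"\w+|[^\w\s]", sentence.lower())
    tagged = []
    for token in tokens:
        if token in TAG_LEXICON:
            tag = TAG_LEXICON[token]
        elif not token.isalnum():
            tag = "punctuation"
        else:
            tag = "unknown"
        tagged.append((token, tag))
    return tagged


print(pos_tag("This is an article."))
```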

Entity linking is the process of detecting named entities and linking them to a prearranged category. For example, linking “Barack Obama” to “Person”, and “California” to “Place”. Entities from input text are mapped and linked according to a knowledge base (data sets with lists of words and their associated category).

The entity linking process is composed of several subprocesses, two of them being named entity recognition and named entity disambiguation. The two terms are often used interchangeably but actually differ slightly.

Named entity recognition: Identifying and tagging named entities in a sentence.

Named entity disambiguation: Identifying the right entity among entities that share the same name (e.g., apple the fruit and Apple the company).
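Putting the two subprocesses together, a toy entity linker might first recognize known names in the text and then disambiguate them using context clue words. A minimal sketch, assuming a tiny hypothetical knowledge base; real systems link to large knowledge bases such as Wikidata and use learned models for disambiguation.

```python
import re

# Hypothetical knowledge base: surface form -> candidates (entity, category, context clues).
KNOWLEDGE_BASE = {
    "barack obama": [("Barack Obama", "Person", set())],
    "california": [("California", "Place", set())],
    "apple": [
        ("Apple Inc.", "Organization", {"iphone", "company", "shares", "ceo"}),
        ("apple (fruit)", "Food", {"eat", "pie", "tree", "juice"}),
    ],
}


def link_entities(text: str) -> list[tuple[str, str, str]]:
    """Recognize known names in the text, then link each to its best-matching entity."""
    lowered = text.lower()
    context = set(re.findall(r"[a-z]+", lowered))
    links = []
    for surface, candidates in KNOWLEDGE_BASE.items():
        # Named entity recognition: does the name occur as whole words?
        if re.search(rf"\b{re.escape(surface)}\b", lowered):
            # Named entity disambiguation: prefer the candidate with the most clue words in context.
            entity, category, _ = max(candidates, key=lambda c: len(c[2] & context))
            links.append((surface, entity, category))
    return links


print(link_entities("Barack Obama visited California."))
print(link_entities("Apple shares rose after the iPhone launch."))
```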

Chunking refers to the process of identifying and extracting phrases from text data. Whereas tokenization separates sentences into individual words, chunking groups words into phrases and treats each phrase as a single unit. For example, “North America” is treated as a single unit rather than being split into “North” and “America”.
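Chunking is often done with POS-tag patterns (for example, grammars that match noun phrases). The sketch below shows only the simpler idea described here, greedily grouping known multiword phrases from a hypothetical phrase list into single units.

```python
# Hypothetical list of multiword phrases to keep together.
PHRASES = {("north", "america"), ("new", "york", "city"), ("machine", "learning")}


def chunk(tokens: list[str], phrases: set[tuple[str, ...]] = PHRASES) -> list[str]:
    """Greedily merge the longest known multiword phrase starting at each position."""
    max_len = max(len(phrase) for phrase in phrases)
    lowered = [token.lower() for token in tokens]
    result, i = [], 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            if tuple(lowered[i:i + n]) in phrases:
                result.append(" ".join(tokens[i:i + n]))
                i += n
                break
        else:  # no phrase starts here, keep the single token
            result.append(tokens[i])
            i += 1
    return result


print(chunk("Sales grew in North America".split()))
# ['Sales', 'grew', 'in', 'North America']
```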

Parsing in natural language processing refers to the process of analyzing the syntactic (grammatical) structure of a sentence. Once the text has been cleaned and the tokens identified, the parsing process segregates every word and determines the relationships between them.

By analyzing the relationship between these individual tokens, the NLP model can ascertain any underlying patterns. These patterns are crucial for further tasks such as sentiment analysis, machine translation, and grammar checking.

Computers were initially created to automate mathematical calculations. For example, the ancient Greeks built the Antikythera mechanism to calculate the timing of astronomical events such as eclipses.

Recently, scientists have engineered computers to go beyond processing numbers and understand human language and communication. Rather than merely running data through a formulaic algorithm to produce an answer (like a calculator), computers can now also “learn” new words like a human.

In other words, computers are beginning to complete tasks that previously only humans could do. This advancement in computer science and natural language processing is creating ripple effects across every industry and level of society.

Since computers can process far more data than humans, NLP allows businesses to scale up their data collection and analysis efforts. With natural language processing, you can examine thousands, if not millions, of text documents from multiple sources almost instantaneously.

Some important use cases include analyzing interview transcriptions, market research survey forms, social media comments, and so on.

Natural language processing makes work processes more efficient and, in turn, lowers operating costs. NLP models can automate menial tasks such as answering customer queries and translating texts, thereby reducing the need for administrative workers.

Moreover, automation frees up your employees’ time and energy, allowing them to focus on strategizing and other tasks. As a result, your organization can increase its production and achieve economies of scale.

Natural language processing improves B2C, B2B, and B2G companies’ end-user experience. Organizations are constantly communicating with one another, be it through emails, texts, or social media posts.

Since NLP tools are online 24/7, your end-users have access to customer service anytime. You can also use NLP to improve internal stakeholder workflows, for example by automatically classifying emails by urgency.

Natural language processing tools provide in-depth insights and understanding into your target customers’ needs and wants. Marketers often integrate NLP tools into their market research and competitor analysis to extract possibly overlooked insights.

Insights that your competitors don’t have can become your business’s core competency and give you a better chance of becoming the market leader. Rather than making assumptions about your customers, you’ll be crafting targeted marketing strategies grounded in NLP-backed data.

We rely on computers to communicate and work with each other, especially during the ongoing pandemic. To that end, computers must be able to interpret and generate responses accurately.

Every organization can apply natural language processing to enhance their work processes. Let’s take a look at 5 use cases of natural language processing:

The most common application of natural language processing in customer service is automated chatbots. Chatbots receive customer queries and complaints, analyze them, and then generate a suitable response.

You can also continuously train them by feeding them pre-tagged messages, which allows them to better predict future customer inquiries. For example, messages containing “how much” or “price” might be tagged as pricing questions. As a result, the chatbot can accurately understand an incoming message and provide a relevant answer.

A well-trained chatbot can provide standardized responses to frequently asked questions, thereby saving time and labor costs – but not completely eliminating the need for customer service representatives.

Chatbots may answer FAQs, but highly specific or important customer inquiries still require human intervention. Thus, you can train chatbots to differentiate between FAQs and important questions, and then direct the latter to a customer service representative on standby.
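The keyword tagging and hand-off described above can be sketched with simple substring matching. The intents, keywords, and canned answers are hypothetical placeholders; production chatbots typically use trained intent classifiers rather than fixed keyword lists.

```python
from typing import Optional

# Hypothetical keyword tags for two FAQ intents.
INTENT_KEYWORDS = {
    "pricing": ("how much", "price", "cost"),
    "opening_hours": ("opening hours", "open", "close"),
}
# Placeholder canned answers.
FAQ_ANSWERS = {
    "pricing": "You can find our current prices on our pricing page.",
    "opening_hours": "Our opening hours are listed on our contact page.",
}
ESCALATION = "Let me connect you with a customer service representative."


def detect_intent(message: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the message, if any."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return None


def respond(message: str) -> str:
    """Answer recognized FAQs; hand everything else to a human agent."""
    intent = detect_intent(message)
    return FAQ_ANSWERS[intent] if intent else ESCALATION


print(respond("How much does the premium plan cost?"))
print(respond("My order arrived damaged and I want a refund."))
```

Routing unrecognized messages to a person, rather than guessing, is what keeps the chatbot from giving confident but wrong answers to important inquiries.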

You can also use NLP to detect sentiment in interactions and determine the underlying issues your customers are facing. For example, sentiment analysis tools can reveal which aspects of your products and services customers complain about the most.

Natural language processing involves interpreting input and responding by generating a suitable output. In machine translation, this means analyzing text in one language and responding with its translation in another language.

Machine translation allows for seamless communication among multilingual employees.

Traditionally, companies would hire employees who all spoke a single common language for easier collaboration. However, in doing so, companies also miss out on qualified talent simply because candidates do not share the same native language.

NLP applications such as machine translations could break down those language barriers and allow for more diverse workforces. In turn, your organization can reach previously untapped markets and increase the bottom line.

Moreover, NLP tools can translate large chunks of text at a fraction of the cost of human translators. Of course, machine translations aren’t 100% accurate, but they consistently achieve 60-80% accuracy rates – good enough for most business communication.

The global market of NLP in healthcare and life sciences is expected to grow at a CAGR of 19.0% to reach 4.3 billion USD by 2026.

Hospitals are already utilizing natural language processing to improve healthcare delivery and patient care.

For instance, NLP systems can assign ICD-10-CM codes to patient records. These codes record a patient’s diagnoses and symptoms. With this information in hand, doctors can easily cross-reference similar cases to provide more accurate diagnoses to future patients.

NLP models are also frequently used in encrypted documentation of patient records. All sensitive information about a patient must be protected in line with HIPAA. Since handwritten records can easily be stolen, healthcare providers rely on NLP machines because of their ability to document patient records safely and at scale.

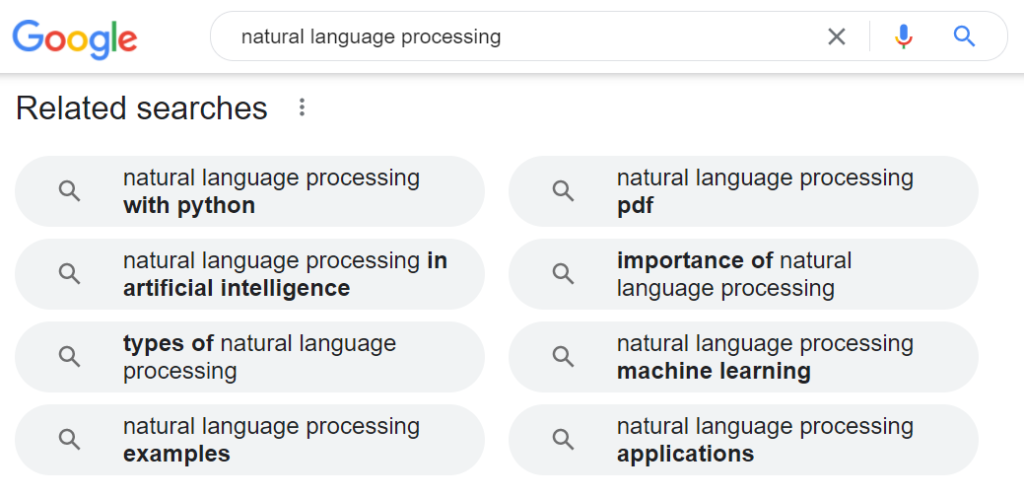

Google incorporates natural language processing into its algorithms to provide the most relevant results on Google SERPs. In the past, you could improve a page’s rank by engaging in keyword stuffing and cloaking.

However, Google’s current algorithms use NLP to crawl through pages like a human, allowing them to detect unnatural keyword usage and automatically generated content. Moreover, Googlebot (Google’s web crawler) also assesses the semantics and overall user experience of a page.

In other words, you must provide valuable, high-quality content if you want to rank on Google SERPs. You can do so with the help of modern SEO and writing tools such as SEMrush and Grammarly. These tools use NLP techniques to enhance your content marketing strategy and improve your SEO efforts.

For example, SEO keyword research tools understand semantics and search intent to suggest related keywords that you should target. Spelling and grammar checkers also use NLP techniques to identify and correct errors, thereby improving overall content quality.

Depending on your organization’s needs and size, your market research efforts could involve thousands of responses that require analyzing. Rather than manually sifting through every single response, NLP tools provide you with an immediate overview of key areas that matter.

For example, text classification and named entity recognition techniques can create a word cloud of prevalent keywords in the research. This information allows marketers to then make better decisions and focus on areas that customers care about the most.
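At its simplest, the data behind a keyword word cloud is word frequencies after stopword removal. A minimal sketch with a made-up stopword list and survey responses; the tools described above layer classification and named entity recognition on top of counting like this.

```python
import re
from collections import Counter

# Words too common to be interesting in a word cloud (illustrative list).
STOPWORDS = {"the", "is", "too", "but", "was", "a", "and", "it", "of", "to"}


def top_keywords(responses: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Count non-stopword words across all responses and return the n most common."""
    counts = Counter()
    for response in responses:
        words = re.findall(r"[a-z']+", response.lower())
        counts.update(word for word in words if word not in STOPWORDS)
    return counts.most_common(n)


survey = [
    "The price is too high",
    "Great support, but the price is high",
    "Support was slow",
]
print(top_keywords(survey))
```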

Some market research tools also use sentiment analysis to identify how customers feel about a product or about specific aspects of their products and services. The sentiment analysis models then classify the overall sentiment as negative, neutral, or positive.

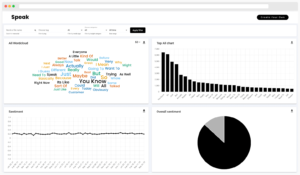

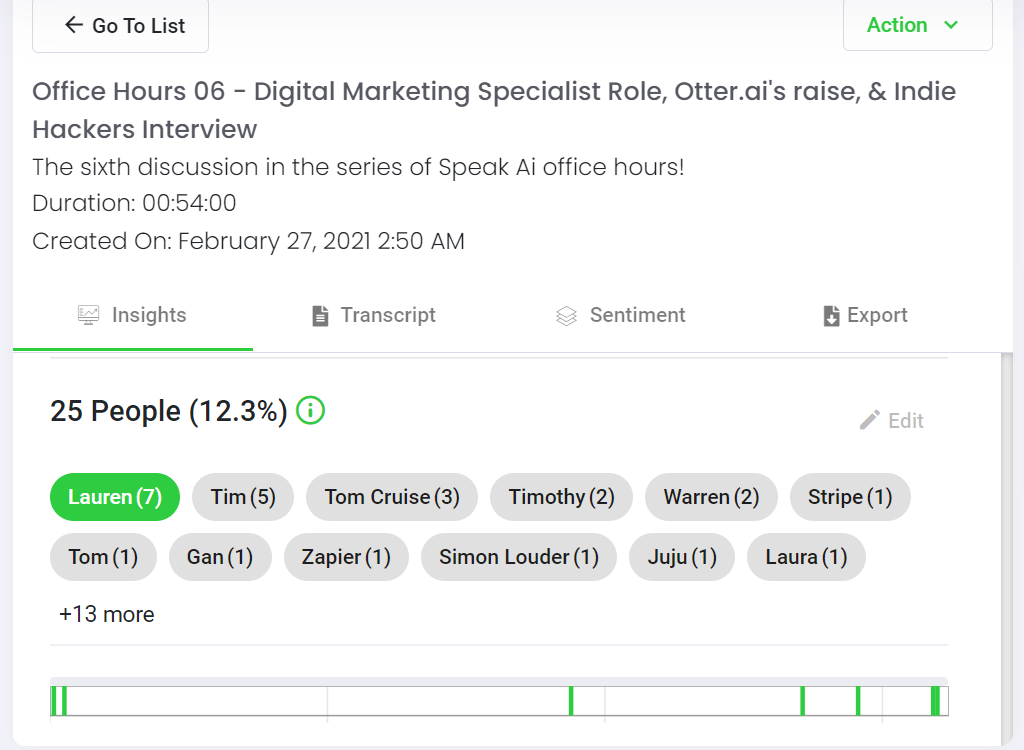

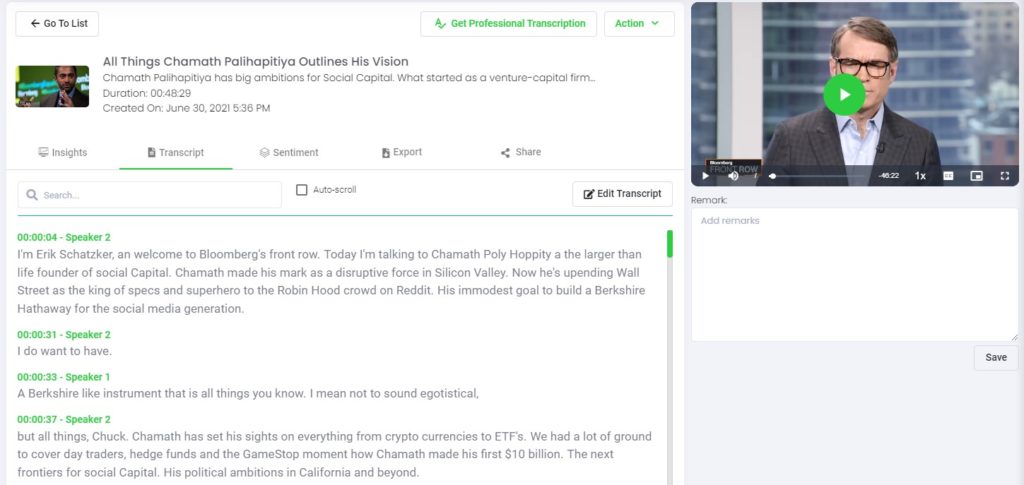

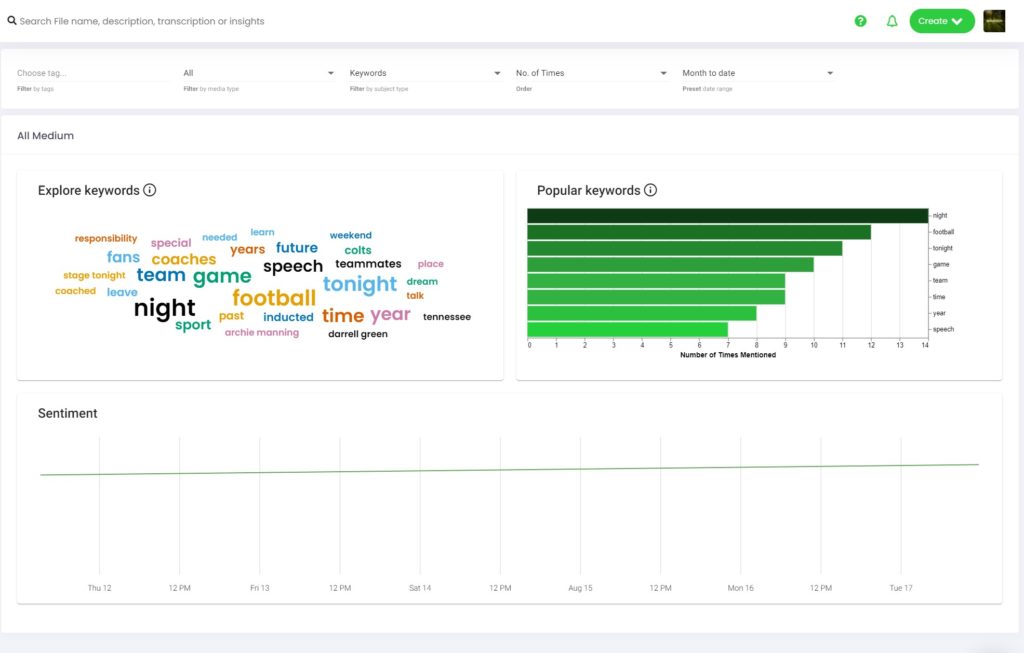

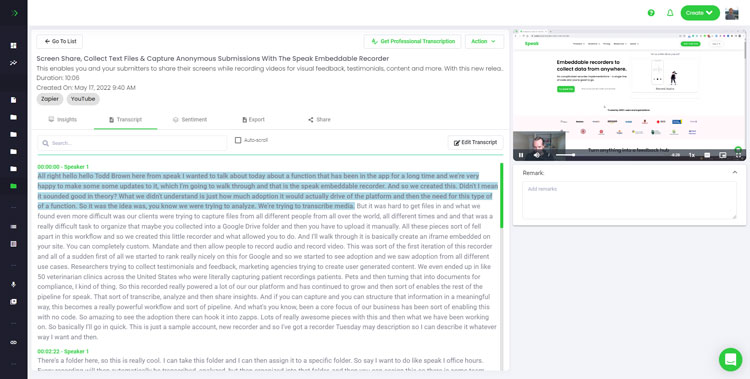

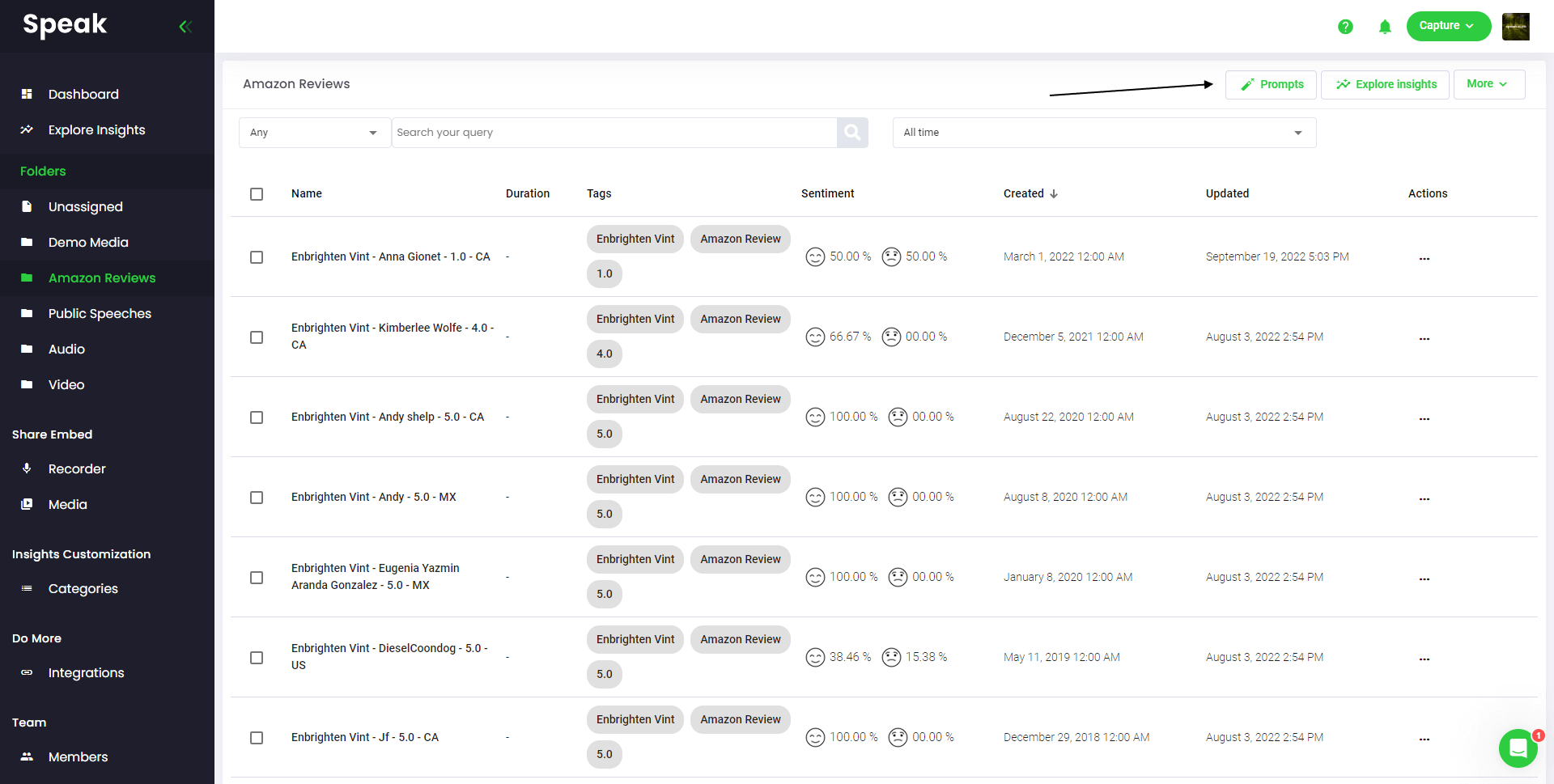

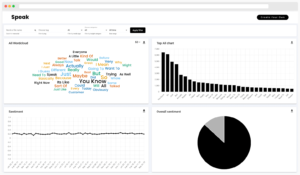

One such market research tool with sentiment analysis is Speak. Our comprehensive suite of tools records qualitative research sessions and automatically transcribes them with great accuracy.

Once these transcripts are ready, our text analytics algorithm processes their content and tags it accordingly. These tags include speakers, named entities, recurring keywords, or any custom category that you can preset.

We also utilize natural language processing techniques to identify the transcripts’ overall sentiment. Our sentiment analysis model is well-trained and can detect polarized words, sentiment, context, and other phrases that may affect the final sentiment score.

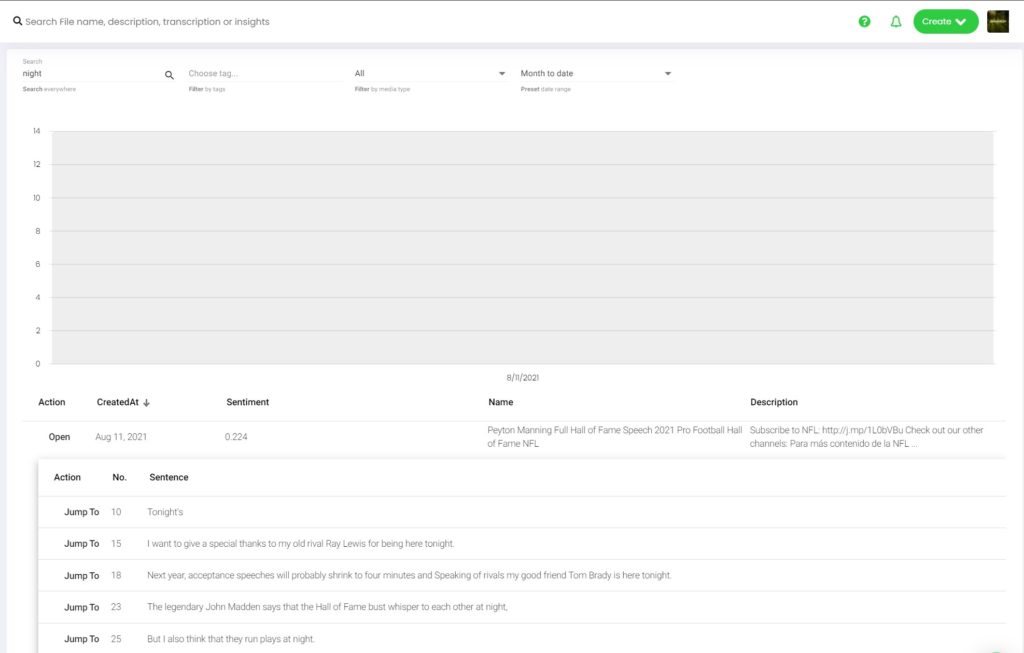

Then, Speak automatically visualizes all those key insights in the form of word clouds, keyword count scores, and sentiment charts (as shown above). You can even search for specific moments in your transcripts easily with our intuitive search bar.

Best of all, our centralized media database allows you to do everything in one dashboard – transcribing, uploading media, text and sentiment analysis, extracting key insights, exporting as various file types, and so on.

This allows you to seamlessly share vital information with anyone in your organization no matter its size, allowing you to break down silos, improve efficiency, and reduce administrative costs.

Do you want to visualize keywords and phrases with Speak? Use our free online word cloud generator to instantly create word clouds of filler words and more.

Join 7,000+ individuals and teams who are relying on Speak Ai to capture and analyze unstructured language data for valuable insights. Start your trial or book a demo to streamline your workflows, unlock new revenue streams and keep doing what you love.

Natural language processing, machine learning, and AI have become a critical part of our everyday lives. Whenever a computer performs a task involving human language, NLP is involved, in applications ranging from chatbots and market research to text messaging.

Despite its many benefits and applications, NLP still has flaws in interpreting human language. There are many challenges in natural language processing, including homonyms, sarcasm, ambiguity, and bias.

Homonyms (words that share the same spelling and pronunciation but have different meanings) are one of the main challenges in natural language processing. Native speakers usually understand these words easily because they interpret words based on context.

For example, “bark” can refer either to the sound a dog makes or to the outer layer of a tree. NLP models that are well trained through continuous feeding can usually discern between homonyms. However, new words and new definitions of existing words are constantly being added to the English lexicon.

This makes it difficult for NLP models to keep up with the evolution of language and could lead to errors, especially when analyzing online texts filled with emojis and memes.

When we converse with other people, we infer from body language and tonal clues to determine whether a sentence is genuine or sarcastic. However, we sometimes fall prey to sarcastic comments too.

For example, we sometimes can’t tell whether our relatives asking about our marriage plans are being sarcastic or genuinely curious.

Since we ourselves can’t consistently distinguish sarcasm from sincerity, we can’t expect machines to be better than us in that regard. Nonetheless, sarcasm detection is still crucial in tasks such as sentiment analysis and analyzing interview responses.

Words, phrases, and even entire sentences can have more than one interpretation. Sometimes, these sentences genuinely do have several meanings, often causing miscommunication among both humans and computers. These genuine ambiguities are quite uncommon and aren’t a serious problem.

After all, NLP models are built by human engineers, so we can’t expect machines to perform better than humans here. However, some sentences have one clear meaning, yet the NLP model assigns them another interpretation. These computer-made ambiguities are the main problem that data scientists are still struggling to resolve, because inaccurate text analysis can have serious consequences.

For example, let’s take a look at this sentence, “Roger is boxing with Adam on Christmas Eve.” The word “boxing” usually means the physical sport of fighting in a boxing ring. However, when read in the context of Christmas Eve, the sentence could also mean that Roger and Adam are boxing gifts ahead of Christmas.

NLP models are trained by feeding them data sets, which are created by humans. However, humans have implicit biases that may pass undetected into the machine learning algorithm.

One example is gender stereotypes that associate “man” with “computer programmer” and “woman” with “homemaker”. Racial and religious stereotypes held by human researchers may also transfer into machine learning algorithms.

The best way to address bias in NLP is to remove existing bias from the datasets themselves. This entails identifying your own implicit biases and editing the dataset to be bias-free. For example, researchers can diversify the dataset by adding data that associates “woman” with “computer programmer”.
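Identifying bias in a dataset can start with something as simple as counting how often gendered words co-occur with occupation words in the same sentence. A minimal sketch, assuming hypothetical word lists and a toy corpus; a real audit would use much larger lists and proper statistical tests.

```python
import re
from collections import Counter

# Hypothetical word lists for the audit.
GENDER_TERMS = {"female": {"she", "her", "woman"}, "male": {"he", "his", "man"}}
OCCUPATIONS = {"programmer", "homemaker", "nurse", "engineer"}


def gender_occupation_counts(sentences: list[str]) -> Counter:
    """Count sentences in which a gender group and an occupation appear together."""
    counts = Counter()
    for sentence in sentences:
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        for group, terms in GENDER_TERMS.items():
            if words & terms:
                for job in words & OCCUPATIONS:
                    counts[(group, job)] += 1
    return counts


corpus = [
    "He is a programmer.",
    "She is a homemaker.",
    "The man worked as an engineer.",
    "She became a programmer.",
]
print(gender_occupation_counts(corpus))
```

A heavily skewed table, such as “programmer” appearing almost only alongside male terms, is a signal that the data needs rebalancing.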

Natural language processing has been making progress and shows no sign of slowing down. According to Fortune Business Insights, the global NLP market is projected to grow at a CAGR of 29.4% from 2021 to 2028.

One reason for this exponential growth is that the pandemic increased demand for communication tools such as smart home assistants, transcription software, and voice search.

Meanwhile, more than one-third of companies had adopted artificial intelligence as of 2021. That number will only increase as organizations begin to realize NLP’s potential to enhance their operations.

We’ve extensively walked through what natural language processing is, how it works, and some of its common tasks and use cases. By now, you may be intrigued by NLP and itching to try it out for yourself, which you can!

Simply type something into our text and sentiment analysis tools, and then hit the analyze button to see the results immediately.

Natural language processing has been around for decades. As a result, the data science community has built a comprehensive NLP ecosystem that allows anyone to build NLP models from the comfort of their home.

NLP communities aren’t just there to provide coding support; they’re also the best places to network and collaborate with other data scientists. They could be your gateway to career opportunities, helpful resources, or simply more friends to learn NLP with.

Since NLP is part of data science, these online communities frequently intertwine with other data science topics. Hence, you'll be able to develop a complete repertoire of data science knowledge and skills.

There are dozens of communities and blogs that you can join. We’ve curated some of our favorite NLP communities that you should join.

Reddit: Reddit is a social media platform with thousands of subreddits (smaller communities), each focused on a specific topic. Examples include r/datascience, r/linguistics, r/Python, r/LanguageTechnology, r/machinelearning, and r/learnmachinelearning. Together, the data science and NLP subreddits total more than 100 million users.

GitHub: GitHub is one of the largest developer communities, with more than 73 million users and 4 million organizations. It is a platform where users can collaborate on and build software together, and share useful resources such as datasets and tutorials.

Stack Overflow: Stack Overflow is the go-to Q&A platform for both professionals and students learning data science. If you’ve ever run into a brick wall when building an NLP model, chances are there’s already a solution for that issue on Stack Overflow.

Kaggle: Kaggle is a massive data science community where users can share datasets, join competitions, enroll in courses, and create NLP models with one of their 400,000 public notebooks.

Stanford NLP Group: The Stanford NLP group is a team of Stanford students, programmers, and faculty members including NLP experts Christopher Manning and Dan Jurafsky. They provide a constant stream of blog posts, tweets, publications, open-source software, and online courses.

The most common way to develop natural language processing projects is with Python, one of the most popular programming languages in the world. More specifically, many projects use Python NLTK (the Natural Language Toolkit), a suite of tools created specifically for computational linguistics.

Beyond its broad range of tools for common NLP tasks, NLTK also has a growing community, FAQs, and recommendations for NLTK courses. The NLTK team has also written a comprehensive guide to using the toolkit.
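As a small taste of what NLTK does, the sketch below splits text into tokens and counts word frequencies. It assumes NLTK is installed (for example with `pip install nltk`) and deliberately uses `wordpunct_tokenize`, which is regex-based and needs no extra NLTK data downloads; the helper name `top_words` is our own, not part of NLTK.

```python
from nltk.probability import FreqDist
from nltk.tokenize import wordpunct_tokenize


def top_words(text: str, n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent alphabetic tokens in text, lowercased."""
    # wordpunct_tokenize splits text with the regex \w+|[^\w\s]+,
    # so it works without downloading any NLTK models or corpora.
    tokens = wordpunct_tokenize(text)
    # Keep only purely alphabetic tokens (drops punctuation) and normalize case.
    words = [token.lower() for token in tokens if token.isalpha()]
    # FreqDist is NLTK's frequency distribution, a subclass of collections.Counter.
    return FreqDist(words).most_common(n)


if __name__ == "__main__":
    sample = "NLP is fun. NLP is useful. NLP tools help."
    print(top_words(sample, 2))  # [('nlp', 3), ('is', 2)]
```

Tokenizing and counting like this is often the first step before more advanced tasks such as tagging or classification, which NLTK also supports.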

Since natural language processing is a decades-old field, the NLP community is already well-established and has created many projects, tutorials, datasets, and other resources.

One example is this curated resource list on GitHub with over 130 contributors. It contains tutorials, books, NLP libraries in 10 programming languages, datasets, and online courses, as well as a curated collection of NLP resources for other languages such as Korean, Chinese, and German.

The standard book for NLP learners is “Speech and Language Processing” by Dan Jurafsky and James Martin, renowned professors of computer science at Stanford and the University of Colorado Boulder, respectively.

Jurafsky in particular is well known in the NLP community, having authored many influential publications on natural language processing. The book is also freely available online, and the authors continue to update it with draft chapters.

Another book we recommend is the “Handbook of Natural Language Processing,” edited by Nitin Indurkhya and Fred Damerau. Every chapter is written by field experts and peer-reviewed before being compiled and edited by Indurkhya and Damerau.

There is an abundance of video series dedicated to teaching NLP – for free. However, that also leads to information overload and it can be challenging to get started with learning NLP.

Amid all that noise, we’ve selected three video and lecture series suitable for both beginner and intermediate NLP learners. Because they’re series of recorded lectures rather than courses you enroll in, you can watch them at your own pace.

Sentdex: Sentdex is a popular YouTuber who teaches NLP, machine learning, and Python. His in-depth video series is a great entry point for NLP beginners because he explains the topic clearly.

NLP by Dan Jurafsky: Dan Jurafsky, a linguistics and computer science professor at Stanford, provides a comprehensive walkthrough of NLP in his 127-lecture video series. The videos are fairly short, about 10 minutes each, and cover essentially every aspect of NLP.

Deep Learning for NLP by Oxford: The entire 13-week Deep Learning for NLP lecture series by the University of Oxford and DeepMind is archived and freely available on GitHub. You can access the course materials, reading suggestions, and lecture notes, and watch the lecture videos at your own pace.

To start your transcription and analysis, you first need to create a Speak account. No worries, this is super easy to do!

Get a 7-day trial with 30 minutes of free English audio and video transcription included when you sign up for Speak.

To sign up for Speak and start using Speak Magic Prompts, visit the Speak app register page here.

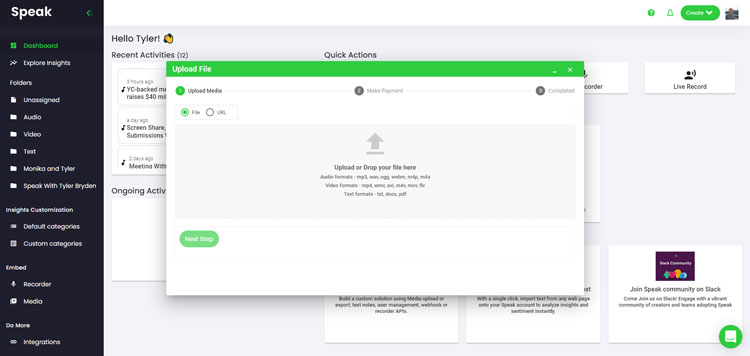

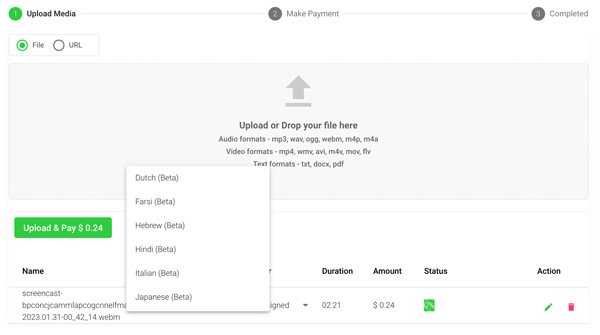

We typically recommend MP4s for video or MP3s for audio.

However, we accept a range of audio, video and text file types.

You can upload your file for transcription in several ways using Speak:

You can also upload CSVs of text files or audio and video files. You can learn more about CSV uploads and download Speak-compatible CSVs here.

With CSVs, you can upload anything from dozens of YouTube videos to thousands of interview files.

You can also upload media to Speak through a publicly available URL.

As long as the file type extension appears at the end of the URL, you will have no problem importing your recording for automatic transcription and analysis.
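Because the import relies on the file extension at the end of the URL, you can check a link before submitting it. The sketch below is illustrative only: `looks_importable` is a hypothetical helper, not part of any Speak API, and the extension list is an assumption built around the MP4 and MP3 formats recommended above (check Speak's own list of accepted file types).

```python
from urllib.parse import urlparse

# Assumed extensions for illustration only; Speak's accepted list may differ.
SUPPORTED_EXTENSIONS = (".mp3", ".mp4", ".wav", ".m4a", ".txt")


def looks_importable(url: str) -> bool:
    """Return True if url is an http(s) link ending in a supported extension."""
    cleaned = url.strip()
    parsed = urlparse(cleaned)
    # A publicly available link should use http or https and name a host.
    if parsed.scheme not in {"http", "https"} or not parsed.netloc:
        return False
    # Follow the guidance literally: the extension must be at the very end of
    # the URL, so a trailing query string such as "?dl=1" fails the check.
    return cleaned.lower().endswith(SUPPORTED_EXTENSIONS)


if __name__ == "__main__":
    print(looks_importable("https://example.com/media/interview.mp3"))       # True
    print(looks_importable("https://example.com/media/interview.mp3?dl=1"))  # False
```

The check is intentionally strict: a link that serves an MP3 but ends in a query string is rejected, which mirrors the requirement that the extension be the last part of the URL.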

Speak is compatible with YouTube videos. All you have to do is copy the URL of the YouTube video (for example, https://www.youtube.com/watch?v=qKfcLcHeivc) and paste it into Speak.

Speak will automatically find the file, calculate the length, and import the video.

If you are using YouTube videos, please make sure you use the full link rather than the shortened youtu.be link. Additionally, make sure you remove the channel name from the URL.
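If you are preparing many YouTube links, a small script can expand shortened `youtu.be` links into the full `watch?v=` form and drop extra query parameters, such as the `ab_channel` parameter that carries the channel name. This is a hypothetical helper (`normalize_youtube_url` is our own name) based on the guidance above, not a Speak tool, and it only handles these two URL shapes.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def normalize_youtube_url(url: str) -> str:
    """Return https://www.youtube.com/watch?v=<id> for a YouTube video link.

    Handles full watch URLs and shortened youtu.be links. All other query
    parameters (for example ab_channel, which holds the channel name) are
    dropped. Raises ValueError for anything else.
    """
    parsed = urlparse(url.strip())
    host = parsed.netloc.lower()
    if host == "youtu.be":
        # Shortened form: the video ID is the path, e.g. youtu.be/qKfcLcHeivc
        video_id = parsed.path.lstrip("/")
    elif host in {"youtube.com", "www.youtube.com", "m.youtube.com"} and parsed.path == "/watch":
        # Full form: the video ID is the "v" query parameter.
        video_id = parse_qs(parsed.query).get("v", [""])[0]
    else:
        raise ValueError(f"Not a recognized YouTube video URL: {url}")
    if not video_id:
        raise ValueError(f"No video ID found in: {url}")
    # Rebuild the URL with only the video ID.
    return "https://www.youtube.com/watch?" + urlencode({"v": video_id})


if __name__ == "__main__":
    print(normalize_youtube_url("https://youtu.be/qKfcLcHeivc"))
    print(normalize_youtube_url(
        "https://www.youtube.com/watch?v=qKfcLcHeivc&ab_channel=SomeChannel"))
```

Both calls print `https://www.youtube.com/watch?v=qKfcLcHeivc`, the full-link form shown in the example above.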

As mentioned, Speak also contains a range of integrations for Zoom, Zapier, Vimeo and more that will help you automatically transcribe your media.

This library of integrations continues to grow! Have a request? Feel encouraged to send us a message.

Once your file(s) are ready and loaded into Speak, it will automatically calculate the total cost (you get 30 minutes of audio and video free in the 7-day trial, so take advantage of it!).
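To estimate ahead of time how much of an upload falls outside the free allowance, you can total your file lengths yourself. This is a rough sketch: `billable_minutes` is a hypothetical helper, and rounding up to whole minutes is an assumption rather than Speak's documented billing rule. Per-minute prices are not stated here, so the sketch stops at minutes and leaves the actual cost to Speak's calculator.

```python
import math

FREE_TRIAL_MINUTES = 30  # free audio/video minutes in the 7-day trial


def billable_minutes(durations_seconds: list[float],
                     free_minutes: float = FREE_TRIAL_MINUTES) -> int:
    """Estimate the minutes left to pay for after the free allowance.

    Assumption: usage is totalled across all files and rounded up to whole
    minutes; Speak's actual billing rules may differ.
    """
    total_minutes = sum(durations_seconds) / 60
    # Only the portion above the free allowance is billable.
    remaining = max(0.0, total_minutes - free_minutes)
    return math.ceil(remaining)


if __name__ == "__main__":
    # Two files: 30 minutes and 20.5 minutes, 50.5 minutes in total.
    print(billable_minutes([1800, 1230]))  # 21
```

In the usage example, 20.5 of the 50.5 minutes exceed the 30 free minutes, which this sketch rounds up to 21.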

If you are uploading text data into Speak, there is currently no charge. Only Speak Magic Prompts analysis incurs a fee, which is detailed below.

Once you go over your 30 minutes or need to use Speak Magic Prompts, you can pay by subscribing to a personalized plan using our real-time calculator.

You can also add a balance or pay for uploads and analysis without a plan using your credit card.

If you are uploading audio and video, our automated transcription software will prepare your transcript quickly. Once completed, you will get an email notification that your transcript is complete. That email will contain a link back to the file so you can access the interactive media player with the transcript, analysis, and export formats ready for you.

If you are importing CSVs or uploading text files, Speak will generally analyze the information much more quickly.

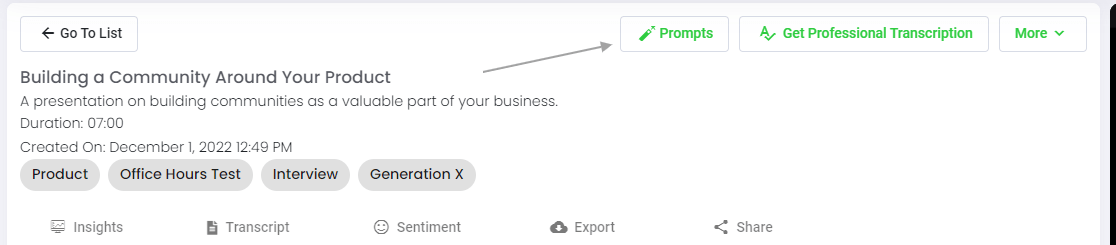

Speak is capable of analyzing both individual files and entire folders of data.

When you are viewing any individual file in Speak, all you have to do is click on the "Prompts" button.

If you want to analyze many files, all you have to do is add the files you want to analyze into a folder within Speak.

You can do that by adding new files into Speak or you can organize your current files into your desired folder with the software's easy editing functionality.

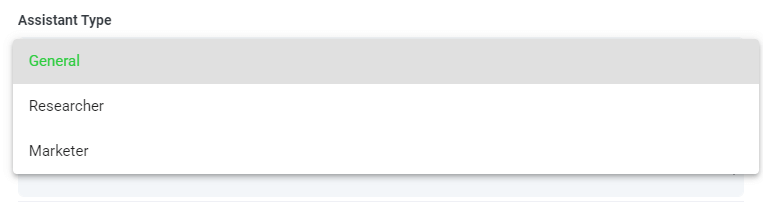

Speak Magic Prompts leverage recent advances in artificial intelligence, specifically models often referred to as "generative AI".

These models have been trained on huge amounts of data from across the internet to gain an understanding of language.

With that understanding, these "large language models" are capable of performing mind-bending tasks!

With Speak Magic Prompts, you can now perform those tasks on the audio, video and text data in your Speak account.

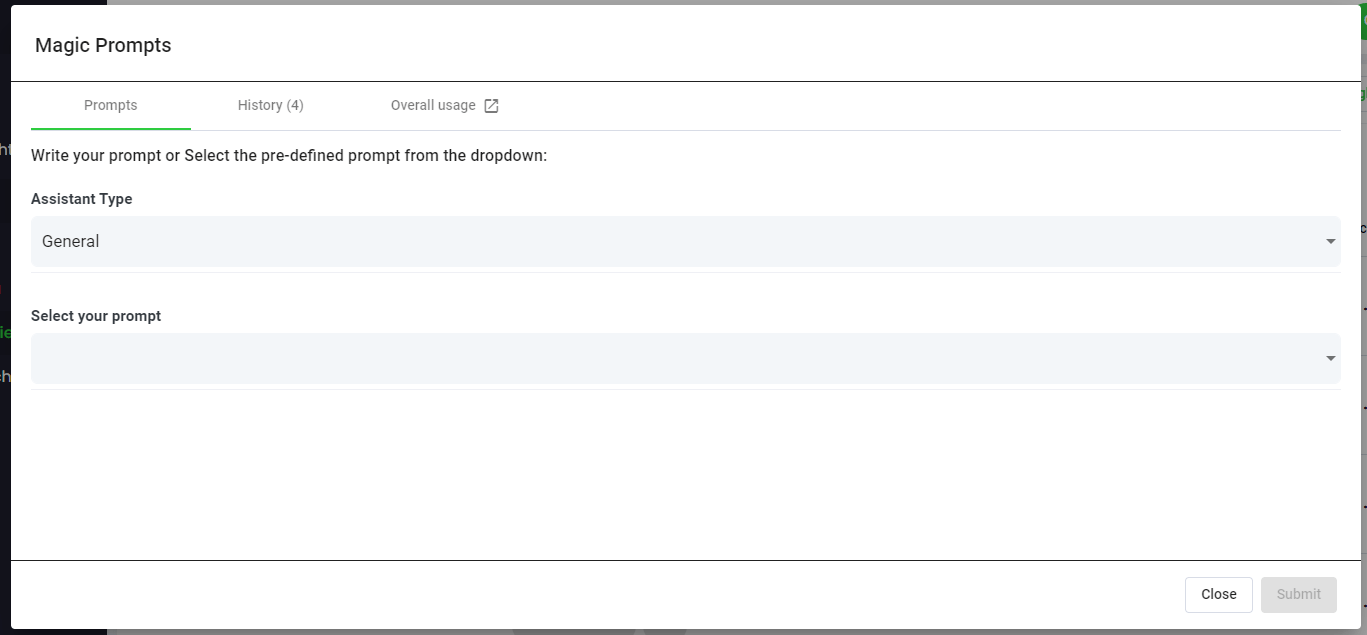

To help you get better results from Speak Magic Prompts, Speak has introduced "Assistant Type".

These assistant types preset the context given to the prompt engine, producing more concise, meaningful outputs based on your needs.
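This article does not describe how the prompt engine uses the assistant type internally, but the general idea of presetting context can be illustrated by placing a role description in front of the user's question before it is sent to a language model. Everything below is hypothetical: the `ASSISTANT_CONTEXT` entries, their wording, and `build_prompt` are illustrative names, not Speak's implementation.

```python
# Hypothetical role descriptions; Speak's real assistant types and wording may differ.
ASSISTANT_CONTEXT = {
    "general": "You are a helpful assistant analyzing transcripts.",
    "researcher": "You are a qualitative researcher summarizing interview findings.",
    "marketer": "You are a marketer extracting messaging ideas from customer conversations.",
}


def build_prompt(assistant_type: str, question: str, transcript: str) -> str:
    """Combine a preset role, the transcript, and the user's question into one prompt."""
    if assistant_type not in ASSISTANT_CONTEXT:
        raise ValueError(f"Unknown assistant type: {assistant_type}")
    # The preset context comes first so the model answers from that perspective.
    return "\n\n".join([
        ASSISTANT_CONTEXT[assistant_type],
        f"Transcript:\n{transcript}",
        f"Question: {question}",
    ])


if __name__ == "__main__":
    print(build_prompt("researcher",
                       "What are the top product dissatisfiers?",
                       "Customer: The app is slow to load."))
```

Keeping the role text separate from the question is what lets the same question yield differently framed answers depending on the selected type.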

To begin, we have included:

Choose the most relevant assistant type from the dropdown.

Here are some example prompts that you can apply to any file right now:

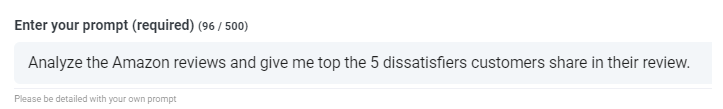

A modal will pop up so you can use the suggested prompts we shared above to instantly and magically get your answers.

If you have your own prompts you want to create, select "Custom Prompt" from the dropdown and another text box will open where you can ask anything you want of your data!

Speak will generate a concise response for you in a text box below the prompt selection dropdown.

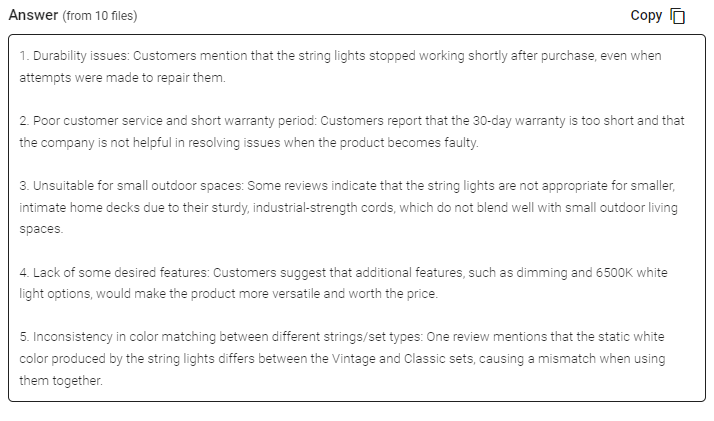

In this example, we ask Speak to analyze all the interview data in the folder at once and identify the top product dissatisfiers.

You can easily copy that response for your presentations, content, emails, team members and more!

Our team at Speak Ai continues to optimize the pricing for Magic Prompts and Speak as a whole.

Right now, anyone in the 7-day trial of Speak gets 100,000 characters included in their account.

If you need more characters, you can easily include Speak Magic Prompts in your plan when you create a subscription.

You can also upgrade the number of characters in your account if you already have a subscription.

Both options are available on the subscription page.

Alternatively, you can use Speak Magic Prompts by adding a balance to your account. The balance is drawn down as characters are analyzed.

Here at Speak, we've made it incredibly easy to personalize your subscription.

Once you sign up, just visit our custom plan builder and select the media volume, team size, and features you want to get a plan that fits your needs.

No more rigid plans. Upgrade, downgrade or cancel at any time.

When you subscribe, you will also get a free premium add-on for three months!

That means you save up to $50 USD per month and $150 USD in total.

Once you subscribe to a plan, all you have to do is send us a live chat message with your selected premium add-on from the list below:

We will put the add-on live in your account free of charge!

What are you waiting for?

If you have friends, peers, and followers interested in using our platform, you can earn real money every month.

You will be paid a percentage of all sales, whether the customers you refer pay for a plan, automatically transcribe media, or use professional transcription services.

Use this link to become an official Speak affiliate.

It would be an honour to personally jump on an introductory call with you to make sure you are set up for success.

Just use our Calendly link to find a time that works well for you. We look forward to meeting you!

Natural language processing is the rapidly advancing field of teaching computers to process human language, allowing them to interpret and respond to it in human-like ways. NLP has led to groundbreaking innovations across many industries, from healthcare to marketing.

Some of these applications include sentiment analysis, automatic translation, and data transcription. In practice, NLP techniques and tools come into play whenever people use computers to communicate with one another.

In that sense, nearly every organization uses NLP, even if it doesn’t realize it. Consumers, too, use NLP tools in their daily lives, such as smart home assistants, Google Search, and social media advertisements.

Natural language processing, machine learning, and AI have made great strides in recent years, and the future looks bright for NLP as the technology is expected to advance even further, especially during the ongoing COVID-19 pandemic.



Powered by Speak Ai Inc. Made in Canada with

Use Speak's powerful AI to transcribe, analyze, automate and produce incredible insights for you and your team.