Is GPT-4 Multimodal?

GPT-4 is a large language model developed by OpenAI that generates human-like text. It has been described as one of the most powerful language models yet created, and it is widely regarded as a major step forward in natural language processing (NLP). A question that comes up often, though, is whether GPT-4 is multimodal. In this blog post, we'll explore that question.

What is Multimodal AI?

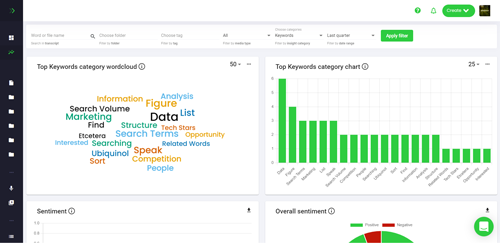

Before we can discuss whether GPT-4 is multimodal, we must first understand what multimodal AI is. In a nutshell, a multimodal AI system is one that can process more than one type of data, or modality. For example, a multimodal system might combine images, text, and audio to make predictions or identify patterns. This type of AI is becoming increasingly important as more kinds of data become available, both for training AI systems and for the applications they power.
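To make the idea concrete, here is a minimal sketch of one common approach, often called "late fusion", in which each modality is encoded separately and the resulting feature vectors are combined before a final prediction. The feature values, weights, and function names below are hypothetical illustrations rather than part of any library; real systems use learned encoders instead of hand-written numbers.

```python
from typing import Dict, List

# Hypothetical feature vectors standing in for the outputs of separate
# per-modality encoders (e.g. an image model, a text model, an audio model).
Features = Dict[str, List[float]]


def late_fusion(features: Features, order: List[str]) -> List[float]:
    """Concatenate per-modality feature vectors into one joint vector."""
    fused: List[float] = []
    for modality in order:
        fused.extend(features[modality])
    return fused


def linear_score(vector: List[float], weights: List[float], bias: float = 0.0) -> float:
    """Apply a toy linear model to the fused vector."""
    if len(vector) != len(weights):
        raise ValueError("vector and weights must have the same length")
    return sum(v * w for v, w in zip(vector, weights)) + bias


example: Features = {
    "image": [0.5, 1.0],
    "text": [2.0],
    "audio": [0.0, -1.0],
}
fused = late_fusion(example, ["image", "text", "audio"])
score = linear_score(fused, [1.0, 1.0, 0.5, 2.0, 1.0])
print(fused, score)
```

Concatenation is the simplest fusion strategy; other designs project each modality into a shared embedding space or let one modality attend to another.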

How Does GPT-4 Work?

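GPT-4 is built on the Transformer, a neural network architecture that uses a mechanism called self-attention to weigh how relevant each part of the input is to every other part. According to OpenAI, GPT-4 was pre-trained to predict the next token in a document, using publicly available data (such as internet data) and data licensed from third-party providers, and was then fine-tuned with reinforcement learning from human feedback (RLHF). When it generates text, it produces one token at a time, with each new token conditioned on everything that came before it. OpenAI has not published further details of the model's architecture or training data.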
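The core operation inside a Transformer is scaled dot-product attention, softmax(QKᵀ/√d)·V. Below is a minimal pure-Python sketch of the textbook version, with the causal mask used by GPT-style text generators so that each position can attend only to itself and earlier positions. It is illustrative only: OpenAI has not published GPT-4's architecture details, and real models use many attention heads, learned projection matrices, and optimized tensor libraries.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an n x d matrix by a d x m matrix."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix, causal: bool = True) -> Matrix:
    d = len(q[0])
    # Similarity of every query with every key, scaled by sqrt(d).
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(q, transpose(k))]
    if causal:
        # Mask future positions: position i may only attend to positions j <= i.
        scores = [
            [s if j <= i else -math.inf for j, s in enumerate(row)]
            for i, row in enumerate(scores)
        ]
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v)


q = k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
out = scaled_dot_product_attention(q, k, v)
print(out)
```

Because of the causal mask, the first position can only attend to itself, so its output is exactly the first value vector; the second position mixes both value vectors according to its attention weights.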

Is GPT-4 Multimodal?

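Yes, in part. According to OpenAI's GPT-4 Technical Report, GPT-4 is a large multimodal model: it accepts both image and text inputs and produces text outputs. That means it can, for example, describe what is in a photo or answer questions about a chart or screenshot. Its multimodality is limited, however: it produces only text, and the report covers image and text inputs only. For that reason, GPT-4 is often used in conjunction with other AI systems to build broader multimodal applications. For example, audio can be transcribed by a separate speech recognition model before being passed to GPT-4, and GPT-4's text output can be used as a prompt for an image generation model.

Conclusion

GPT-4 is a powerful language model with the potential to significantly advance natural language processing. It is multimodal on the input side, accepting images as well as text, but it produces only text. Combined with other AI systems, such as speech recognition or image generation models, it can serve as the core of richer multimodal applications, opening up many new possibilities for AI-powered products.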
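If you want to try GPT-4's image input yourself, OpenAI exposes it through its Chat Completions API, where a single user message can mix text parts and `image_url` parts. The sketch below only builds the JSON request body so that it runs offline; the helper name `build_image_question`, the model name, and the image URL are placeholders of our own, so check OpenAI's current documentation for vision-capable model names and any extra options.

```python
import json
from typing import Any, Dict


def build_image_question(model: str, question: str, image_url: str) -> Dict[str, Any]:
    """Build a Chat Completions request body pairing a text prompt with an image.

    Hypothetical helper for illustration; it does not send any request.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # One user message can contain both text parts and image parts.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


payload = build_image_question(
    model="YOUR-VISION-CAPABLE-GPT-4-MODEL",  # placeholder, not a real model name
    question="What is shown in this chart?",
    image_url="https://example.com/chart.png",  # hypothetical image URL
)
print(json.dumps(payload, indent=2))
```

In practice, this body would be sent as a POST request to OpenAI's Chat Completions endpoint along with your API key, and the model's answer would come back as text.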