Professional transcription services without the hassle.

Forget the back-and-forth emails. Manage orders and organize your transcripts in one place.

Get a 7-day fully-featured trial.

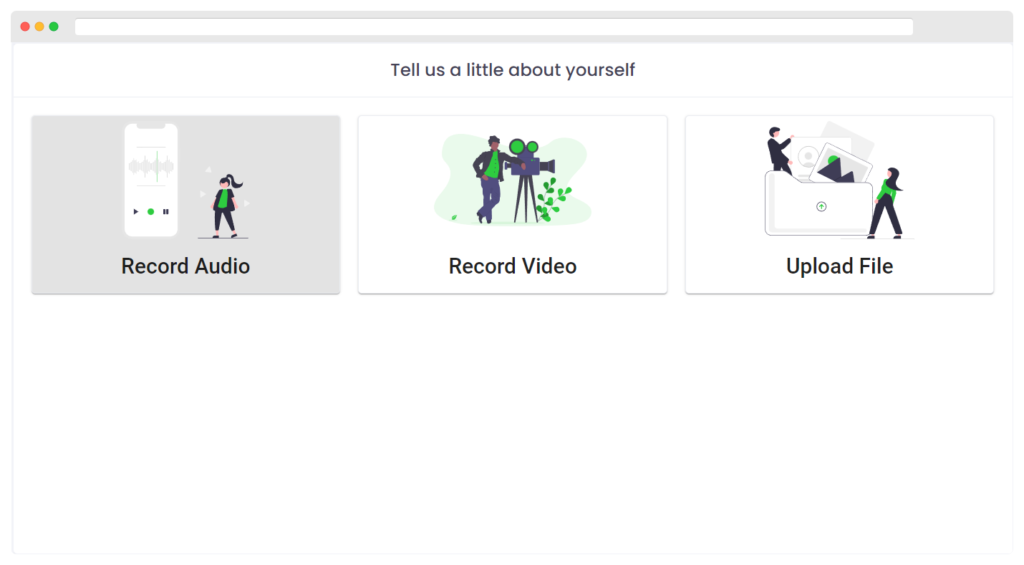

Upload video or audio files from a personal library or any publicly available URL to Speak Ai and get your transcript in minutes.

You can also use our built-in recorders or capture recordings from anywhere with our embeddable audio and video recorders.

We guarantee 99% accuracy with all our transcripts and have a solid team to deliver on our promises. Transcripts are uploaded directly to your account for approval.

You can also get your transcripts back in as little as 48 hours with our rush service.

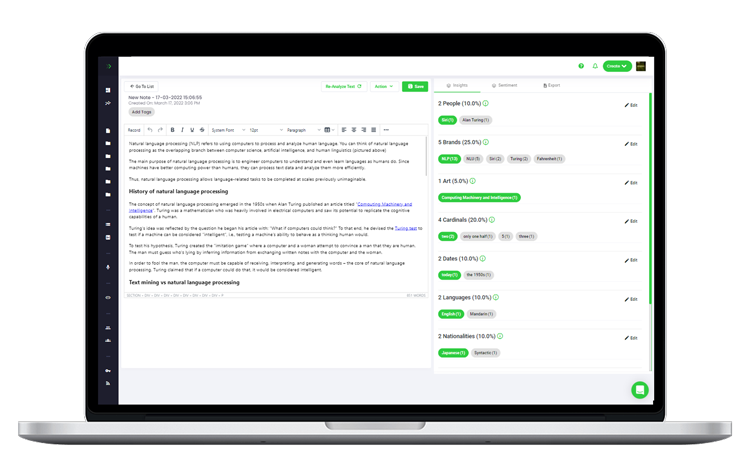

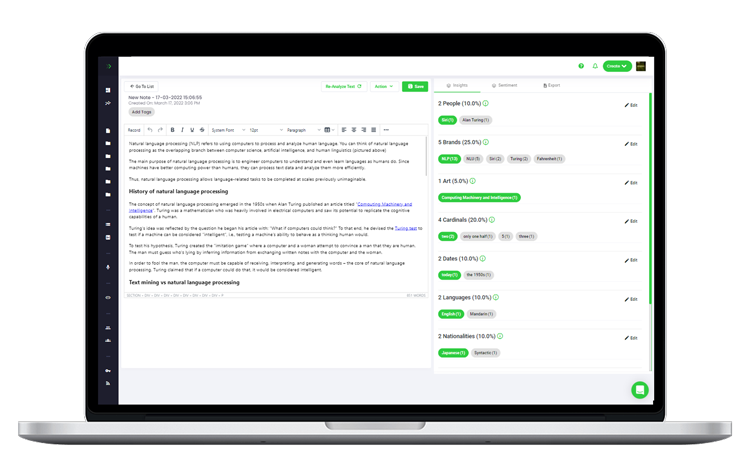

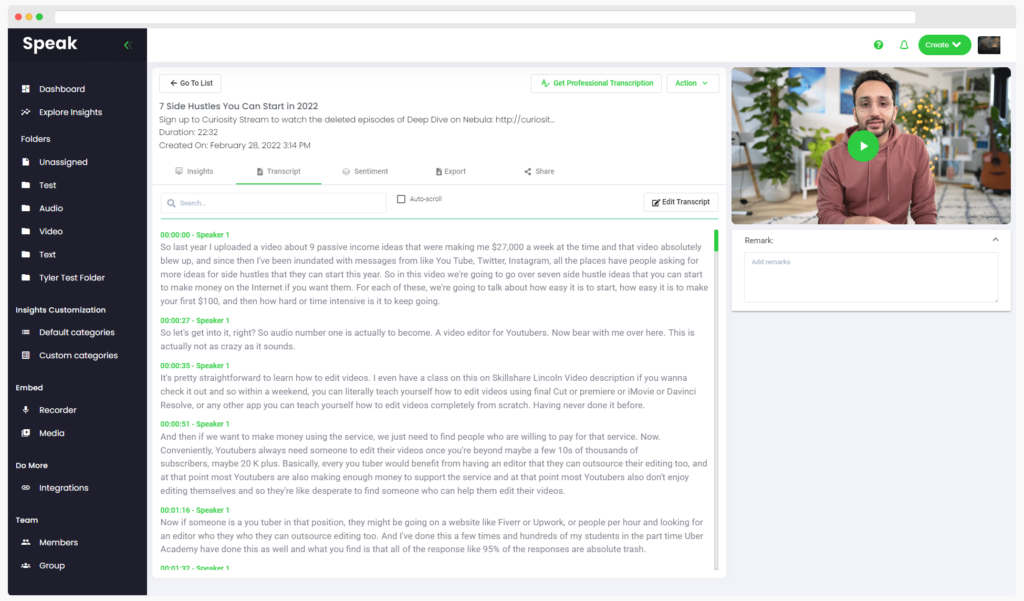

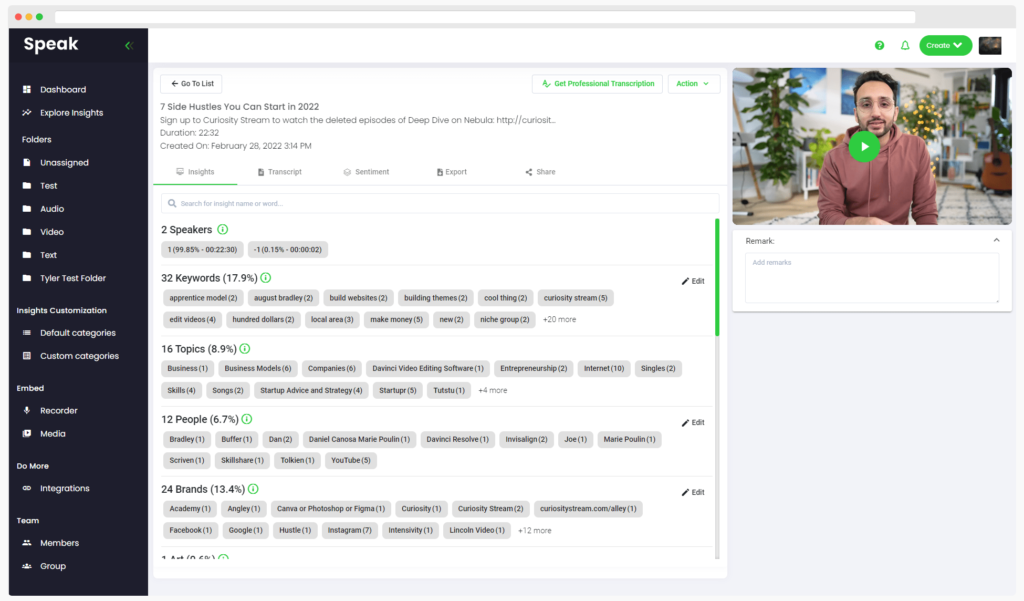

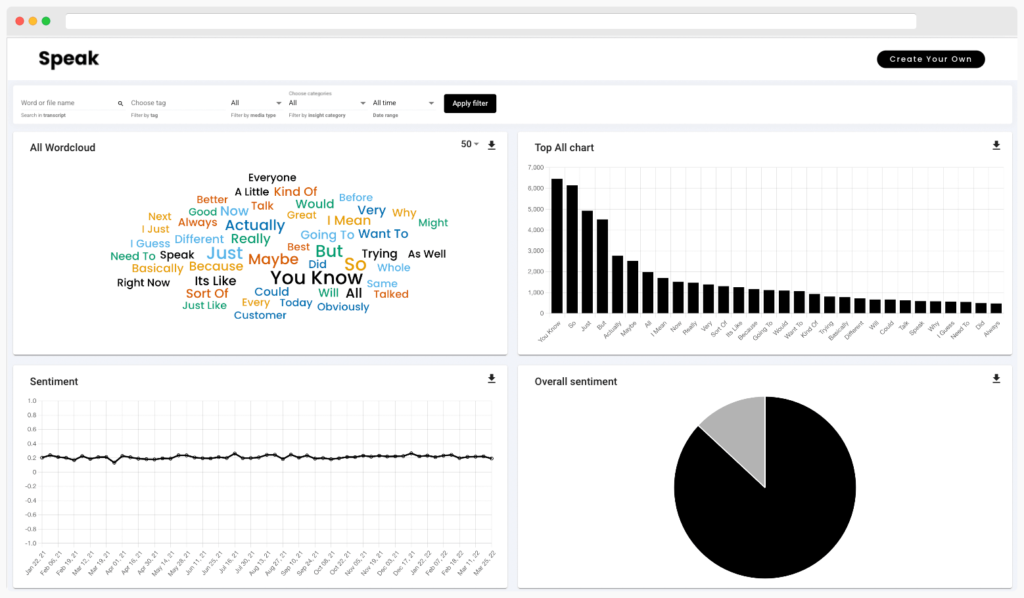

Find keyword and topic trends, along with sentiment analysis, for any file you upload to Speak.

By enabling navigation by keywords, you can find important moments and useful information in seconds.

If your media contains industry-specific terminology, it's easy to train our AI by building out a custom vocabulary library specific to your account.

Easily create a central hub for all your interviews, team meetings, webinars, sales calls or personal notes.

Extend your institutional memory by making it easy to find important moments and information you need with advanced search, intuitive tagging and visualizations.

Our goal is to let you bring your entire workflow together seamlessly.

Native Zoom and Vimeo integrations allow you to sync entire libraries of videos or recordings.

You can also build custom automations through our various Zapier templates or with Speak APIs.

Avoid exposing sensitive information with integrated PII redaction.

Your transcript gets stitched to the original media file in one click.

Automatically identify important keywords, topics and sentiment.

Create shareable custom email, Word or PDF reports.

Clean up your transcripts quickly with systemwide find and replace.

Improve engagement and accessibility with clickable transcripts.

Currently, we only have an English transcription team, but we plan to add a number of other languages soon!

We price ourselves competitively with the industry standard.

At $1.50 per audio minute, our prices are on par with most other transcription companies. We also value our transcribers' work, which means paying them a decent wage.
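To get a rough idea of what a job might cost at the $1.50 per audio minute rate, here is a minimal Python sketch. The function name `estimate_cost` is hypothetical, and the sketch assumes partial minutes are billed pro rata by the second, which this page does not state; actual invoices may round differently.

```python
from decimal import Decimal, ROUND_HALF_UP

# Rate quoted on this page, in dollars per audio minute.
RATE_PER_MINUTE = Decimal("1.50")


def estimate_cost(duration_seconds: int) -> Decimal:
    """Estimate the cost of transcribing a recording.

    Assumption (not confirmed by this page): partial minutes are
    billed pro rata by the second.
    """
    if duration_seconds < 0:
        raise ValueError("duration_seconds must be non-negative")
    minutes = Decimal(duration_seconds) / Decimal(60)
    # Round to whole cents.
    return (minutes * RATE_PER_MINUTE).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP
    )


# A 45-minute interview: 45 * 1.50
print(estimate_cost(45 * 60))  # prints 67.50
```

For larger volumes, treat this figure only as an upper-bound estimate, since enterprise pricing is negotiated separately.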

For larger transcription jobs and bulk uploads, feel free to contact our customer success team, who will be happy to discuss enterprise pricing for your needs.

We have a team of professional transcriptionists to make sure you receive high-quality transcripts. All our transcriptionists are vetted native English speakers.

Yes! We support popular formats including PDFs, Word Docs, TXT and SRT files. We also offer the option to share your media using our interactive media player at no additional cost.

We work with audio and video recordings in MP3, MP4, WMV, AIF, M4A, MOV, AVI, WMA, AAC and WAV to name a few.

You can also upload media links from any publicly available URL.

If you have a file in a format that doesn't work, please contact us and we'll work with you to get your job done.
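If you want to check a batch of files before uploading, a small local check along these lines may help. This is only a sketch: the helper name `is_listed_format` is hypothetical, and the set contains just the formats named above, which is not a complete list. A `False` result means "not named on this page", not "unsupported".

```python
from pathlib import Path

# Formats named on this page. The page says "to name a few",
# so this set is illustrative rather than exhaustive.
LISTED_EXTENSIONS = {
    "mp3", "mp4", "wmv", "aif", "m4a",
    "mov", "avi", "wma", "aac", "wav",
}


def is_listed_format(filename: str) -> bool:
    """Return True if the file extension is one of the formats listed above."""
    extension = Path(filename).suffix.lower().lstrip(".")
    return extension in LISTED_EXTENSIONS


for name in ["interview.MP3", "webinar.mov", "notes.flac"]:
    status = "listed" if is_listed_format(name) else "not listed, contact us"
    print(f"{name}: {status}")
```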

You can find an overview of our security and privacy policies here.

Powered by Speak Ai Inc. Made in Canada.

Use Speak's powerful AI to transcribe, analyze, automate and produce incredible insights for you and your team.